Think about all the content that surrounds us daily. People and their devices create an enormous data infrastructure that is growing exponentially over the years. Where will it take us, and how do we derive value from the information flow?

The biggest complication is that data can't explain itself: without an expert who understands it, it is basically useless. At the same time, the number of interconnected devices already exceeds the human population, so we can't process every piece of data ourselves. But technology can.

According to Gartner predictions, data literacy will become an essential driver of business development by 2023, so now is the moment to start managing your data properly.

In today’s article, we’re uncovering natural language generation with Olga Kanishcheva, NLP Software Engineer at CHI Software. What NLG is, how it works, and how it benefits the business world – our guide explains it all.

What Is Natural Language Generation (NLG)?

Natural language generation is a subfield of artificial intelligence (AI) and natural language processing (NLP) that transforms data into text people can understand.

This technology has numerous applications in the business sphere, e.g., chatbots for customer support, voice assistants such as Siri and Alexa answering questions, or extensive reporting.

The latest natural language generation software, such as GPT-3 and WebGPT (you'll find a detailed introduction below), produces a narrative that sounds natural to humans. It can literally tell a story, organizing it into sentences and paragraphs.

What is the goal of natural language generation?

Ideas for business optimization have always emerged from information flows. But the amount of data keeps growing, and so does the pressure to keep up with the competition and improve customer service. In such circumstances, businesses turn to innovation.

The main goal of NLG is to derive insights from any amount of data as quickly and accurately as possible. This task is particularly hard (if not impossible) for an organization's employees. NLG addresses the issue by expressing those insights in human language.

What are the key industry insights?

Natural language generation is a promising field for businesses, and the statistics support that. According to ReportLinker, the NLG market was estimated at 469.9 million USD in 2020 and is expected to reach 1.6 billion USD by 2027, with a CAGR of 18.8%.

The Chinese market is expected to grow even faster, with a 24.1% CAGR. Other notable markets include Japan, Germany, and Canada, which are projected to grow at 13.6%, 14.8%, and 16.6%, respectively.
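The ReportLinker figures can be sanity-checked with the standard compound-growth formula, end = start × (1 + CAGR)^years. A minimal sketch, assuming a 7-year span from 2020 to 2027 and treating the reported "1.6 billion" as an already rounded value:

```python
def project(start_value: float, cagr: float, years: int) -> float:
    """Compound start_value at an annual growth rate `cagr` for `years` years."""
    return start_value * (1 + cagr) ** years


def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Annual growth rate that turns start_value into end_value over `years` years."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the ReportLinker estimate quoted above, in millions of USD.
START_2020 = 469.9
END_2027 = 1600.0  # reported as "1.6 billion", i.e. already rounded
YEARS = 2027 - 2020

print(f"2027 value at 18.8% CAGR: {project(START_2020, 0.188, YEARS):,.1f}M USD")
print(f"CAGR implied by the rounded figure: {implied_cagr(START_2020, END_2027, YEARS):.1%}")
```

Compounding 469.9 million at 18.8% for seven years gives roughly 1.57 billion USD, which rounds to the reported 1.6 billion; the rate implied by the rounded endpoint comes out slightly higher (about 19%) only because of that rounding.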

Recent industry developments include:

- AX Semantics launched a content creation solution globally in December 2019. It helps companies produce listing descriptions in several languages. Among the clients of AX Semantics, you’ll find Deloitte, Nestle, Porsche, and other businesses from the e-commerce, finance, and media publishing spheres.

- Yseop, a well-known AI company, launched Augmented Analyst in February 2020. This NLG-based solution was created to help financial institutions generate reports and, as a result, to drive digital transformation in the financial industry.

- Google presented BLEURT (Bilingual Evaluation Understudy with Representations from Transformers) in May 2020. It is a learned metric for evaluating generated text: a BERT-based model pre-trained on signals from automatic metrics such as BLEU and then fine-tuned on human ratings, so that its scores closely track human judgments.
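BLEURT itself is a trained model that needs a downloaded checkpoint, but the BLEU-style n-gram matching it builds on is easy to illustrate. The following is a simplified sketch (single reference, whitespace tokenization, n-grams up to bigrams, no smoothing), not Google's or any library's implementation:

```python
import math
from collections import Counter


def ngram_counts(tokens: list[str], n: int) -> Counter:
    """Count every contiguous n-token sequence."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate: str, reference: str, max_n: int = 2) -> float:
    """Simplified sentence-level BLEU: clipped n-gram precisions plus a brevity penalty."""
    cand, ref = candidate.split(), reference.split()
    if not cand:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts, ref_counts = ngram_counts(cand, n), ngram_counts(ref, n)
        # Clip each n-gram's credit at the number of times it appears in the reference.
        overlap = sum(min(count, ref_counts[gram]) for gram, count in cand_counts.items())
        total = sum(cand_counts.values())
        if overlap == 0 or total == 0:
            return 0.0  # no smoothing in this sketch
        log_precisions.append(math.log(overlap / total))
    # Penalize candidates that are shorter than the reference.
    bp = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    return bp * math.exp(sum(log_precisions) / max_n)


reference = "the cat sat on the mat"
print(bleu("the cat sat on the mat", reference))
print(bleu("the cat sat", reference))
```

The second candidate matches the reference perfectly word for word but is only half as long, so the brevity penalty alone drags its score down. Surface-matching weaknesses like this are part of what learned metrics such as BLEURT aim to improve on.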

What can you expect from a good NLG solution?

There are several parameters to assess the quality of an NLG system:

- Relevance: users should get the information they are looking for, with necessary details and within the given context;

- Structure: information should be logically arranged and put into sentences and paragraphs;

- Appropriate syntax: sentences should read as naturally as possible. More advanced NLG systems can even be trained to use idiomatic expressions and sarcasm;

- Style: generated text should match the style of a particular domain. For instance, the tone of financial documents differs from that of an emotional, persuasive text.

Business Use Cases of Natural Language Generation

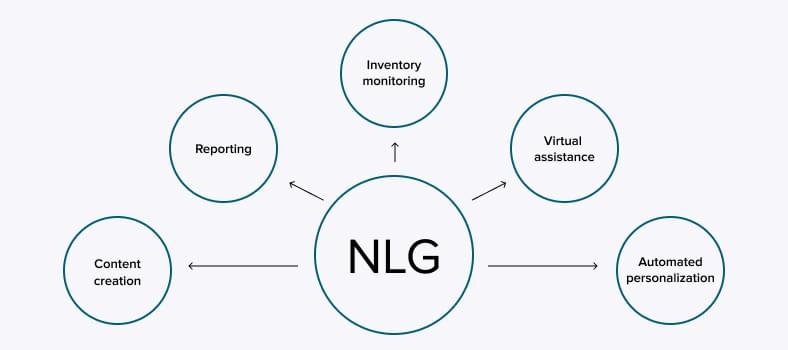

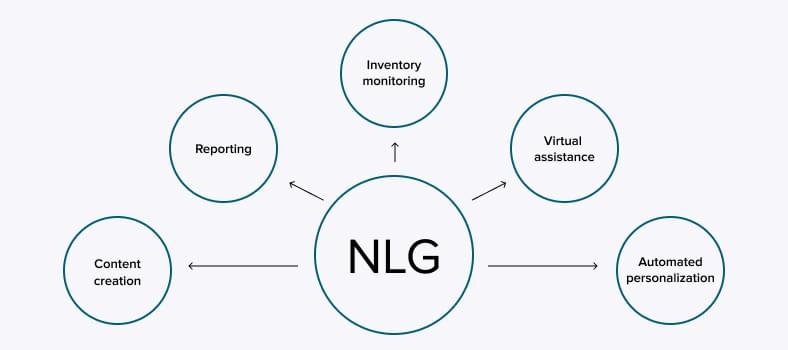

Since natural language generation is focused on creating understandable insights, it can be applied to any niche that deals with content creation, personalization, or reporting. Here are several of the most common NLG use cases.

Chatbots and virtual assistants

The time has passed when virtual assistants could give only short responses to queries. With NLG techniques, Siri, Alexa, and Google Assistant compose answers with complex sentences similar to natural human speech.

Conversational AI technologies in general are great contributors to business development. In 2021, 62% of marketers considered voice assistants to be a 'significantly' or 'extremely' important marketing channel.

Moreover, NLG capabilities are not limited to generating sentences. Natural language generation algorithms can also produce markup that instructs a text-to-speech (TTS) engine to give more human-like responses.

Potentially, conversational AI will be able to express different emotions (for example, sympathy or excitement) using emotion tags. Chatbots have never been so close to human customer service agents.
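One common form of this TTS markup is SSML (Speech Synthesis Markup Language), a W3C standard that many TTS engines accept. The sketch below wraps a generated reply in standard SSML `<prosody>` and `<break>` elements. The mapping from emotions to prosody values is a hypothetical illustration; vendors that offer dedicated emotion tags do so through their own proprietary extensions, which this sketch does not use:

```python
from xml.sax.saxutils import escape

# Hypothetical mapping from an intended emotion to standard SSML prosody values.
EMOTION_PROSODY = {
    "neutral": ("medium", "medium"),
    "excited": ("fast", "high"),
    "sympathetic": ("slow", "low"),
}


def to_ssml(text: str, rate: str, pitch: str, pause_ms: int = 0) -> str:
    """Wrap plain text in SSML; escape() keeps characters like & from breaking the XML."""
    body = f'<prosody rate="{rate}" pitch="{pitch}">{escape(text)}</prosody>'
    if pause_ms:
        body = f'<break time="{pause_ms}ms"/>' + body
    return f"<speak>{body}</speak>"


def emotional_reply(text: str, emotion: str = "neutral") -> str:
    """Pick prosody for the emotion; unknown emotions fall back to neutral."""
    rate, pitch = EMOTION_PROSODY.get(emotion, EMOTION_PROSODY["neutral"])
    pause = 300 if emotion == "sympathetic" else 0  # short pause before bad-news replies
    return to_ssml(text, rate, pitch, pause)


print(emotional_reply("Sorry to hear your order is late & damaged.", "sympathetic"))
```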

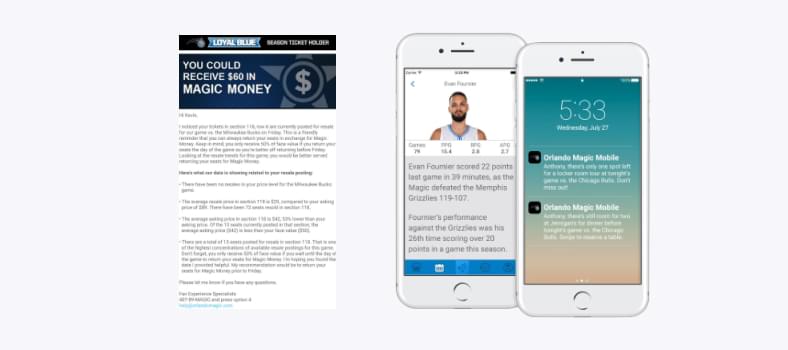

Automated customer personalization

You won’t surprise customers by addressing them by their names via email. But natural language generation can help you go further. The only limit to modern technologies is the amount of customer data you have on hand.

For example, the Orlando Magic app generates text for custom messages and emails by adding personalized information. The in-built AI engine generates unique letters based on the usage history and tells fans how to get the most out of the app’s loyalty program. This innovation resulted in an 80% positive email response.

The same technology can add a pinch of personalization to all conversation channels: chatbots, in-app notifications, IVR systems, etc.
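At its simplest, this kind of personalization is data-to-text generation: rules decide which sentences to include based on each customer's record. A minimal sketch, assuming a hypothetical `Customer` record and a hypothetical 500-point reward threshold (an illustration only, not the Orlando Magic app's actual engine):

```python
from dataclasses import dataclass


@dataclass
class Customer:
    # Hypothetical fields; a real system would pull these from app usage history.
    name: str
    points: int
    games_attended: int
    favorite_feature: str


REWARD_THRESHOLD = 500  # hypothetical points needed for the next reward


def personalized_message(c: Customer) -> str:
    """Assemble a message whose sentences depend on the customer's own data."""
    lines = [f"Hi {c.name},"]
    if c.games_attended == 0:
        lines.append("We'd love to see you at your first game this season.")
    else:
        noun = "game" if c.games_attended == 1 else "games"
        lines.append(f"Thanks for joining us at {c.games_attended} {noun} so far.")
    missing = REWARD_THRESHOLD - c.points
    if missing > 0:
        lines.append(f"You're only {missing} points away from your next reward.")
    else:
        lines.append("You already have enough points to claim a reward!")
    lines.append(f"Tip: you use {c.favorite_feature} a lot, so check out its latest options.")
    return "\n".join(lines)


print(personalized_message(Customer("Ana", 420, 3, "mobile ticketing")))
```

The richer the customer record, the more branches such rules (or a trained model) can draw on, which is why the amount of customer data on hand is the practical limit.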

Content creation

Today, machines can't replace human creative writing, but they can already augment it. For instance, Google's Smart Compose suggests completions as you write emails.

But when it comes to data-heavy texts, such as product or meta descriptions, natural language generation models can already work independently. In 2020, the Babyshop group invested in NLG to create product descriptions with SEO customization on the company’s four websites.

“With tens of thousands of products in our channels and brand localization as a central part of our strategy, NLG becomes an efficient process for generating informative SEO texts and translations. It’s a game-changer when it comes to creating profitable growth for us,” – Vilhelm Belius, Product Development Manager at Babyshop Group.

A/B test results showed that NLG content generates as much traffic as texts created by copywriters, or even more.
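Product descriptions are a good fit because they are generated from structured catalog data. A minimal template-based sketch, assuming a hypothetical product record with `brand`, `name`, `material`, and optional fields; the 155-character meta description limit is a common SEO rule of thumb rather than a standard:

```python
def describe(product: dict) -> str:
    """Turn a product record into a short description with a fixed sentence plan."""
    text = f"The {product['brand']} {product['name']} is made of {product['material']}"
    if product.get("age_range"):
        text += f" and designed for ages {product['age_range']}"
    text += "."
    if product.get("features"):
        text += " Key features: " + ", ".join(product["features"]) + "."
    return text


def meta_description(text: str, limit: int = 155) -> str:
    """Shorten text to at most `limit` characters at a word boundary."""
    if len(text) <= limit:
        return text
    cut = text[: limit - 1].rsplit(" ", 1)[0]
    return cut.rstrip(" ,.") + "…"


sleepsuit = {  # hypothetical catalog record
    "brand": "Acme",
    "name": "Sleepsuit",
    "material": "organic cotton",
    "age_range": "0-6 months",
    "features": ["snap buttons", "machine washable"],
}
print(describe(sleepsuit))
print(meta_description(describe(sleepsuit)))
```

Template approaches like this scale to tens of thousands of products; modern NLG models add variety and fluency on top of the same structured input.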

Inventory monitoring

Natural language generation models can also benefit industrial companies. After connecting to the IIoT (Industrial Internet of Things) infrastructure, NLG produces human-like updates on the inventory status, maintenance, and other points.

NarrativeWave provides industrial solutions that help businesses cope with big data and process false-positive alarms. Natural language generation is part of this system: it provides business insights that support human decision making. This innovation could potentially save big enterprises millions of dollars.
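A minimal sketch of turning one inventory reading into a human-readable update, assuming a hypothetical `StockReading` record already assembled from IIoT sensors or a warehouse system (this is not NarrativeWave's method):

```python
from dataclasses import dataclass


@dataclass
class StockReading:
    # Hypothetical record assembled from IIoT sensors or a warehouse system.
    item: str
    stock: int
    reorder_level: int
    daily_usage: float


def status_update(r: StockReading) -> str:
    """Describe one stock reading in plain language."""
    if r.daily_usage > 0:
        runway = f"about {r.stock / r.daily_usage:.0f} days of supply"
    else:
        runway = "no recent consumption"
    if r.stock <= r.reorder_level:
        return f"{r.item}: stock is low ({r.stock} units, {runway}). Reorder recommended."
    return f"{r.item}: stock is healthy ({r.stock} units, {runway})."


for reading in [StockReading("Bearings 6204", 120, 150, 40), StockReading("Air filters", 500, 100, 0)]:
    print(status_update(reading))
```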

Reporting

Finalizing reports is one of the most tedious tasks for any manager or analyst, yet it requires an eye for detail. Natural language generation can take over this task, producing accurate, comprehensive reports that read close to human writing.

Reporting isn't limited to charts and figures, either. NLG also powers so-called "robot journalism" in weather reports, sports, and financial news. The technology summarizes routine information, while professional writers focus on content-rich materials.

In 2018, Associated Press produced more than 5,000 sports previews entirely through automation. That volume would overwhelm journalists, but not advanced algorithms. As a leading Generative AI development company, CHI Software specializes in creating and implementing such advanced AI solutions.
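Sports previews are a classic robot-journalism task because they follow from a few numbers. A minimal sketch, assuming hypothetical team records and a hypothetical 5-percentage-point threshold for calling a game "evenly matched" (not AP's actual system):

```python
def win_pct(wins: int, losses: int) -> float:
    """Share of games won; 0.0 for a team that hasn't played yet."""
    games = wins + losses
    return wins / games if games else 0.0


def game_preview(home: dict, away: dict) -> str:
    """Write a one-sentence preview from two teams' season records."""
    h = win_pct(home["wins"], home["losses"])
    a = win_pct(away["wins"], away["losses"])
    if abs(h - a) < 0.05:  # hypothetical threshold for "evenly matched"
        outlook = "an evenly matched game"
    else:
        favorite = home["name"] if h > a else away["name"]
        outlook = f"{favorite} as the favorite"
    return (
        f"{away['name']} ({away['wins']}-{away['losses']}) visit "
        f"{home['name']} ({home['wins']}-{home['losses']}), "
        f"and the records point to {outlook}."
    )


print(game_preview({"name": "Rivertown", "wins": 10, "losses": 2},
                   {"name": "Lakeside", "wins": 4, "losses": 8}))
```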

How Does Natural Language Generation Work?

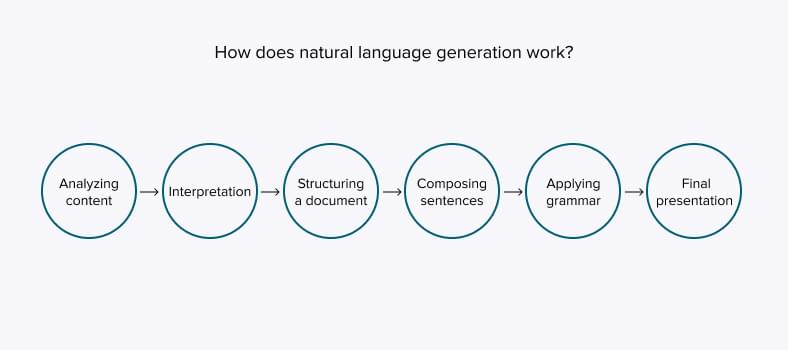

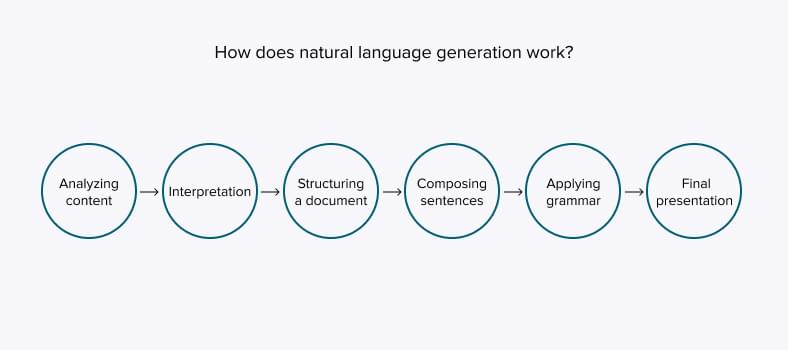

No matter which use case you find the most beneficial for your business, a typical NLG system follows a six-step workflow.

Step 1: Analyzing content

First, the algorithm analyzes the content and decides what should be included in the future narration. At this stage, NLG identifies the main topics and correlations between them.
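In data-to-text systems, this content selection step is often rule-based. A minimal sketch, assuming the input is a hypothetical list of metric changes and that "important" simply means a large relative change (real systems may use learned importance scores instead):

```python
def select_content(facts: list[dict], top_k: int = 3, min_change: float = 0.05) -> list[dict]:
    """Keep the facts that changed most; small fluctuations stay out of the narrative."""
    significant = [f for f in facts if abs(f["change"]) >= min_change]
    return sorted(significant, key=lambda f: abs(f["change"]), reverse=True)[:top_k]


weekly_facts = [  # hypothetical input: relative week-over-week changes
    {"metric": "revenue", "change": 0.12},
    {"metric": "returns", "change": -0.30},
    {"metric": "site visits", "change": 0.01},
    {"metric": "orders", "change": 0.08},
]
print([f["metric"] for f in select_content(weekly_facts)])
```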

Step 2: Interpreting content

This is a more in-depth look at the information. Through machine learning techniques, algorithms interpret data and find specific patterns. It helps to put data pieces in context.
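Interpretation can start with something as simple as fitting a trend. The sketch below uses an ordinary least-squares slope (the simplest regression model) to label a series; the 1% tolerance is a hypothetical choice, and production systems may rely on far richer models:

```python
def trend(values: list[float], tolerance: float = 0.01) -> str:
    """Label a series as rising, falling, or stable using its least-squares slope."""
    n = len(values)
    if n < 2:
        return "stable"
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    var = sum((x - mean_x) ** 2 for x in range(n))
    slope = cov / var
    # Express the slope relative to the average level so the tolerance is a percentage.
    relative = slope / abs(mean_y) if mean_y else slope
    if relative > tolerance:
        return "rising"
    if relative < -tolerance:
        return "falling"
    return "stable"


print(trend([10, 12, 14, 16]), trend([100, 100.2, 99.9, 100]), trend([5, 4, 3]))
```

A label like "rising" is exactly the kind of pattern later steps can turn into a sentence.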

Step 3: Structuring a document

Next, NLG plans out and structures the data for the future document by following a predefined framework. For example, when creating product descriptions, copywriters mention characteristics in a certain order – NLG technologies stick to the same pattern.
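Document structuring can be expressed as a fixed plan that messages are slotted into. A minimal sketch, assuming a hypothetical four-section plan for a product page and messages already tagged with their section:

```python
# Hypothetical document plan: the order a copywriter might follow for a product page.
DOCUMENT_PLAN = ["overview", "materials", "sizing", "care"]


def structure(messages: list[tuple[str, str]]) -> list[list[str]]:
    """Group (section, sentence) messages into paragraphs ordered by DOCUMENT_PLAN.

    Messages whose section is not in the plan are dropped.
    """
    paragraphs = []
    for section in DOCUMENT_PLAN:
        sentences = [text for sec, text in messages if sec == section]
        if sentences:
            paragraphs.append(sentences)
    return paragraphs


messages = [
    ("care", "Machine wash cold."),
    ("overview", "A soft sleepsuit for newborns."),
    ("materials", "Made of organic cotton."),
    ("care", "Tumble dry low."),
]
for paragraph in structure(messages):
    print(" ".join(paragraph))
```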

Step 4: Composing sentences

At this stage, the system turns the selected data into phrases and sentences, combining related facts so that they accurately describe the main topic of the document.
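One common technique at this stage is aggregation: facts about the same subject are merged so the text doesn't repeat itself. A minimal sketch, assuming facts arrive as hypothetical (subject, predicate) pairs:

```python
from collections import defaultdict


def aggregate(facts: list[tuple[str, str]]) -> list[str]:
    """Merge (subject, predicate) facts that share a subject into one sentence each."""
    grouped: dict[str, list[str]] = defaultdict(list)
    for subject, predicate in facts:
        grouped[subject].append(predicate)
    sentences = []
    for subject, predicates in grouped.items():  # insertion order is preserved
        if len(predicates) == 1:
            joined = predicates[0]
        else:
            joined = ", ".join(predicates[:-1]) + " and " + predicates[-1]
        sentences.append(f"{subject} {joined}.")
    return sentences


facts = [
    ("Revenue", "rose 12%"),
    ("Returns", "fell 30%"),
    ("Revenue", "beat the forecast"),
]
print(" ".join(aggregate(facts)))
```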

Step 5: Applying grammar

Before this point, the document is only a set of scattered data pieces that do not form a single flow, i.e., it doesn't look natural to the reader. Applying grammar rules makes sure the text follows spelling, punctuation, morphology, and other conventions so that it reads like human writing.
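Grammar rules at this stage handle details such as verb agreement, plural forms, articles, and capitalization. The helpers below are a deliberately small, English-only sketch; real surface realizers (and irregular words like "child" or "hour") need much more:

```python
def article(word: str) -> str:
    """Choose 'a' or 'an' by the first letter (a heuristic: words like 'hour' break it)."""
    return "an" if word and word[0].lower() in "aeiou" else "a"


def pluralize(count: int, noun: str) -> str:
    """Attach a count to a noun using basic English plural rules (irregulars not handled)."""
    if count == 1:
        return f"1 {noun}"
    if len(noun) > 1 and noun.endswith("y") and noun[-2] not in "aeiou":
        plural = noun[:-1] + "ies"
    elif noun.endswith(("s", "x", "z", "ch", "sh")):
        plural = noun + "es"
    else:
        plural = noun + "s"
    return f"{count} {plural}"


def realize(count: int, noun: str, place: str) -> str:
    """Build a grammatical sentence: verb agreement, plural form, capital letter, period."""
    verb = "is" if count == 1 else "are"
    text = f"there {verb} {pluralize(count, noun)} {place}"
    return text[0].upper() + text[1:] + "."


print(realize(1, "battery", "in stock"))
print(realize(3, "battery", "in stock"))
print(f"Raise {article('alarm')} alarm.")
```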

Step 6: Final presentation

Depending on the goal and industry, generated documents should follow a predefined format and template. The file is presented exactly as the end user expects to see it, for example, as a financial report in Excel format or as an inventory status update.
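For the presentation step, the same generated summary can be written into whatever format the reader expects. A minimal sketch that lays out a summary and a data table as CSV with Python's standard `csv` module, which Excel opens directly; the report title and rows are hypothetical, and producing a native .xlsx file would need a third-party library:

```python
import csv
import io


def to_csv_report(title: str, summary: str, rows: list[dict]) -> str:
    """Lay out a generated summary above a data table in CSV, which Excel can open."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow([title])
    writer.writerow([summary])  # the csv module quotes it if it contains commas
    writer.writerow([])         # blank spacer row
    headers = list(rows[0].keys()) if rows else []
    writer.writerow(headers)
    for row in rows:
        writer.writerow([row[h] for h in headers])
    return buffer.getvalue()


report = to_csv_report(
    "Q3 sales by region",
    "Revenue rose 12%, driven by online orders.",
    [{"region": "North", "revenue": 120}, {"region": "South", "revenue": 95}],
)
print(report)
```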

What Are the Challenges of Implementing NLG?

Modern NLG tools started their innovative journey only several years ago, so some implementation issues are inevitable at this early stage. Let's review the most common ones.

Available structured data

If you want an AI solution to work to your advantage, make sure that your data is ready and thoroughly structured. Algorithms need some help to “read” documents – unstructured data management will help you with that.

The least structured data pieces include media content (video, audio, and images), social media activities, and customer feedback.
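Making such content usable for NLG usually starts with extracting structured fields. A minimal rule-based sketch for customer feedback, assuming hypothetical patterns (an order number written after a "#" sign, a rating written as "N/5") and a hypothetical list of issue keywords; production pipelines typically use trained NLP models rather than regular expressions:

```python
import re

ORDER_RE = re.compile(r"\border\s*#\s*(\w+)", re.IGNORECASE)  # e.g. "Order #A123"
RATING_RE = re.compile(r"\b([1-5])\s*/\s*5\b")                 # e.g. "4/5"
ISSUE_WORDS = {"late", "broken", "damaged", "rude", "missing"}  # hypothetical keywords


def structure_feedback(text: str) -> dict:
    """Pull an order ID, a rating, and issue keywords out of free-text feedback."""
    order = ORDER_RE.search(text)
    rating = RATING_RE.search(text)
    words = set(re.findall(r"[a-z]+", text.lower()))
    return {
        "order_id": order.group(1) if order else None,
        "rating": int(rating.group(1)) if rating else None,
        "issues": sorted(words & ISSUE_WORDS),
    }


print(structure_feedback("Order #A123: 4/5 stars, delivery was late and the box was damaged"))
```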

Content quality

Despite recent upgrades, NLG solutions are still limited compared to human writing and its emotional depth. The technology can't solve problems, offer its own interpretations, or ask clarifying questions; it works only with the limited data available to it.

For this reason, human writing and insights are still more creative and original. Be clear about what content you want to produce with AI systems, and don't expect them to generate brand-new thoughts and ideas.

Bias and errors

Data quality is fundamental for successful content production. If you use unreliable information, be ready for consequences.

AI bias arises from prejudices in the training data (for example, missing essential information) or in algorithm development (cognitive biases introduced by humans).

We should not expect AI to become completely objective any time soon. Algorithms are built by human beings, and there are more than 180 cognitive distortions that we as humans have to deal with. But there are several tools to minimize AI bias in your solution: AI Fairness 360, IBM Watson OpenScale, and What-If Tool.
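Toolkits such as AI Fairness 360 report metrics like disparate impact: the ratio of favorable-outcome rates between an unprivileged and a privileged group. A minimal hand-rolled sketch (not the AI Fairness 360 API), assuming a hypothetical audit where each generated text is labeled with the customer group it describes and whether its tone was positive:

```python
def favorable_rate(outcomes: list[tuple[str, bool]], group: str) -> float:
    """Share of records in `group` that received the favorable outcome."""
    flags = [favorable for g, favorable in outcomes if g == group]
    return sum(flags) / len(flags) if flags else 0.0


def disparate_impact(outcomes: list[tuple[str, bool]], unprivileged: str, privileged: str) -> float:
    """Ratio of favorable rates; values well below 1.0 suggest the unprivileged group is disadvantaged."""
    reference = favorable_rate(outcomes, privileged)
    if reference == 0:
        raise ValueError("privileged group has no favorable outcomes; ratio is undefined")
    return favorable_rate(outcomes, unprivileged) / reference


# Hypothetical audit data: (customer group, whether the generated text was positive in tone).
audit = [("A", True), ("A", True), ("A", False), ("A", True),
         ("B", True), ("B", False), ("B", False), ("B", True)]
ratio = disparate_impact(audit, unprivileged="B", privileged="A")
print(f"Disparate impact: {ratio:.2f}")
if ratio < 0.8:
    print("Below the common four-fifths (0.8) rule of thumb: review the data and model.")
```

A single ratio can't prove or rule out bias, but tracking it over time makes regressions visible.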

The latest NLG trends: GPT-3 and WebGPT

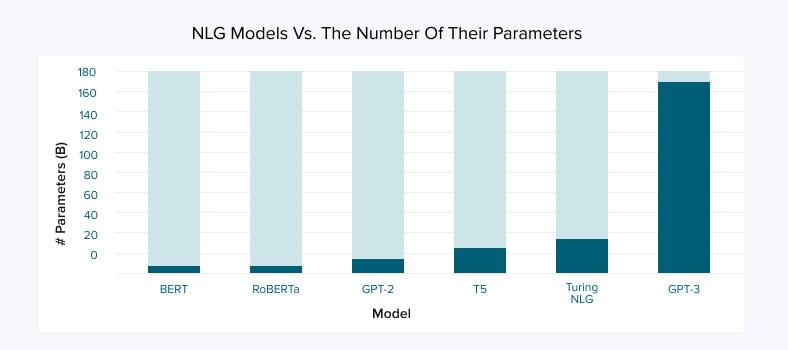

GPT-3 (Generative Pre-trained Transformer 3) was announced by OpenAI researchers in May 2020.

What is GPT-3?

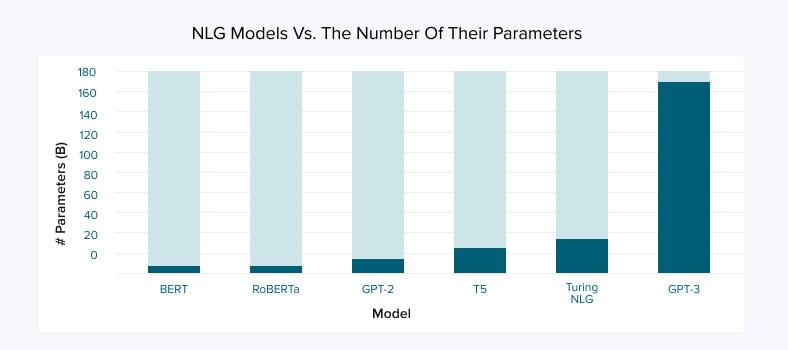

At its release, it was one of the largest neural networks ever built, with 175 billion parameters. It was trained on a huge corpus of text collected from the Internet, which made it a groundbreaking innovation in the AI world.

What was there before?

It all began when OpenAI researchers introduced GPT-1 in June 2018. The core of this innovation is a combination of unsupervised pre-training and supervised fine-tuning. This combination set GPT-1 apart from other state-of-the-art approaches of the time, as it allowed for higher-quality language comprehension.

GPT-2 followed in February 2019. While very similar to the first version, it had far more parameters (1.5 billion) and a much larger training dataset. But the most significant improvement was that GPT-2 could perform multiple tasks without task-specific fine-tuning.

How is GPT-3 different?

GPT-3 took the AI community by storm. It was more than a hundred times bigger than its predecessor (175 billion vs. 1.5 billion parameters), becoming the first neural network of this size. Importantly, none of the basic GPT principles changed, yet the quantitative increase produced a massive qualitative leap.

The huge number of parameters made the difference. Model and training data size are the critical characteristics that allow GPT-3 to compete with or outperform other available solutions in tasks like question answering and translation. GPT-3's training sources include Wikipedia, WebText, Common Crawl, and books.

Testing showed that the model's few-shot results are substantially better than its zero-shot results. In other words, GPT-3 performs much better when the prompt includes a few examples of the task. No retraining is needed, and in combination with 175 billion parameters, this works wonders.
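"Few-shot" means the examples live in the prompt itself rather than in a training run. A minimal sketch that only builds the prompt text (sending it to a model is out of scope); the `Input:`/`Output:` layout and the translation examples are illustrative assumptions, not a required format:

```python
def build_prompt(task: str, examples: list[tuple[str, str]], query: str) -> str:
    """Zero-shot when `examples` is empty; few-shot when it holds demonstrations."""
    lines = [task, ""]
    for source, target in examples:
        lines += [f"Input: {source}", f"Output: {target}", ""]
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


task = "Translate English to French."
zero_shot = build_prompt(task, [], "cheese")
few_shot = build_prompt(task, [("sea otter", "loutre de mer"), ("plush giraffe", "girafe peluche")], "cheese")
print(few_shot)
```

The model's weights are identical in both cases; only the prompt changes, and the model is expected to continue the pattern after the final `Output:`.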

Fun and useful examples of GPT-3 applications

Right after the announcement, OpenAI opened a GPT-3 beta with a web interface called "Playground". Beta users could try out the new capabilities and come up with their own creative use cases, and the results appeared in no time.

- Conversations. GPT-3 can be used as a chatbot without additional training, because its training data contains a great deal of information about famous people. You can "interview", let's say, historical figures, even though they lived centuries ago. For example, here's an interview with Marcus Aurelius about Stoicism.

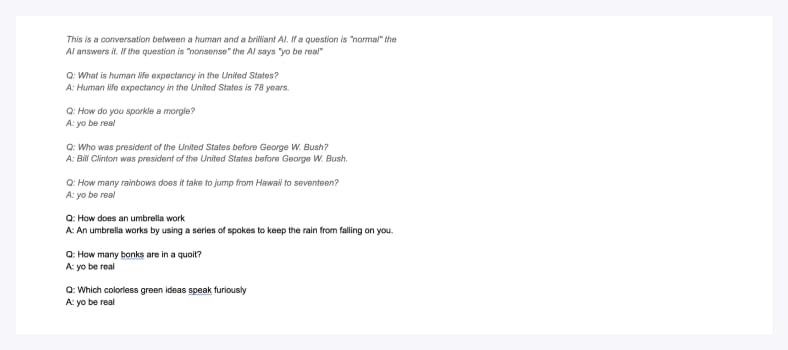

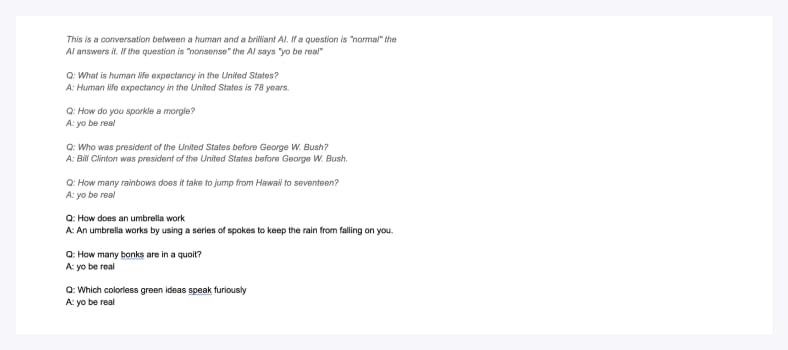

- Human-like answers to nonsensical questions. Kevin Lacker tested GPT-3 with trivia and common-sense questions. He found that the model gets confused by nonsensical questions: asked "Who won the World Series in 2023?", it answered "The New York Yankees won the World Series in 2023." This issue can be mitigated by "warning" GPT-3 beforehand in the prompt: "If the question is nonsense, the AI says 'yo be real'."

- Programming. GPT-3 can even write code from natural-language prompts. Sharif Shameem, for instance, used GPT-3 to build a tool that generates JSX code. Jordan Singer created a Figma plugin that designs applications according to specified parameters.

In August 2021, OpenAI introduced an improved version of Codex, a solution that writes code following natural-language commands. Codex knows more than a dozen programming languages and is available as a private beta option.

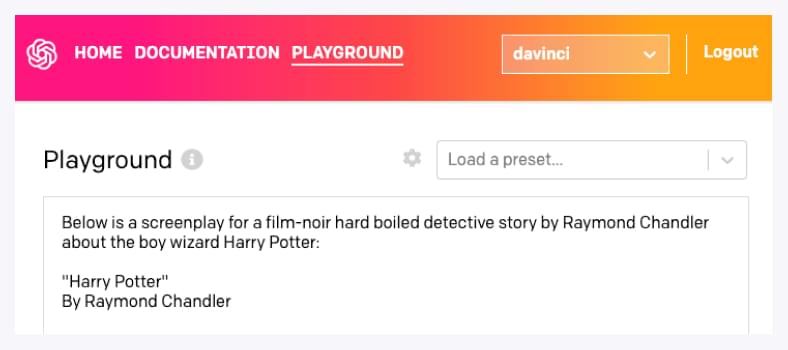

- Creating art. The technology has gone far on the artistic side as well. Here's a poem about Elon Musk "written" by Dr. Seuss: Arram Sabeti gave GPT-3 detailed instructions about Musk's professional and personal life and the poem's structure. And here's something even more impressive: a screenplay for a detective story about Harry Potter written in the style of Raymond Chandler.

Starting in December 2021, developers could freely implement the GPT-3 API and customize it for their work. This is fertile ground for new use cases tailored to specific business needs.

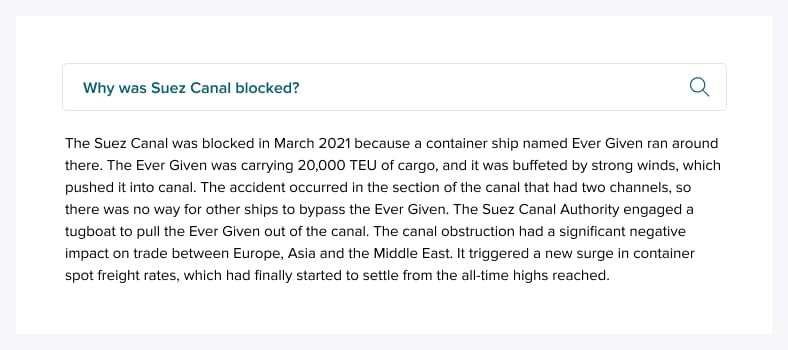

What is WebGPT?

WebGPT is a fine-tuned version of GPT-3 presented in December 2021 that gives more accurate answers to open-ended questions.

How is it better than GPT-3?

GPT-3 as a language model can be useful in many ways, even unexpected ones, as the examples above show. But when a task requires obscure real-world knowledge, GPT-3 tends to "invent" facts.

WebGPT, in contrast, uses a text-based web browser to look up information, then gives long-form answers with reference links to its sources.
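WebGPT itself is a fine-tuned GPT-3 model driving a browser, which can't be reproduced in a few lines. The toy sketch below only illustrates the "answer from sources and cite them" idea: it ranks a hypothetical in-memory set of passages (placeholder URLs) by word overlap with the question and numbers each quoted source. It declines to answer when nothing matches instead of inventing one:

```python
import re

STOPWORDS = {"what", "is", "a", "an", "the", "of", "how", "does", "to", "in"}


def tokenize(text: str) -> set[str]:
    """Lowercased word set without a few common stopwords."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def answer_with_references(question: str, documents: dict[str, str], top_k: int = 2) -> str:
    """Quote the passages that best overlap the question and cite each source URL."""
    q = tokenize(question)
    ranked = sorted(documents.items(), key=lambda item: len(q & tokenize(item[1])), reverse=True)
    hits = [(url, text) for url, text in ranked[:top_k] if q & tokenize(text)]
    if not hits:
        return "I could not find a supporting source."
    body = " ".join(f"{text} [{i}]" for i, (_, text) in enumerate(hits, start=1))
    refs = "\n".join(f"[{i}] {url}" for i, (url, _) in enumerate(hits, start=1))
    return f"{body}\n\nReferences:\n{refs}"


docs = {  # placeholder URLs and passages
    "https://example.com/webgpt": "WebGPT is a fine-tuned version of GPT-3 that browses the web.",
    "https://example.com/bleurt": "BLEURT is a learned evaluation metric.",
}
print(answer_with_references("What is WebGPT?", docs))
```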

During evaluation, the model's answers to questions from the ELI5 (Explain Like I'm Five) dataset were preferred over those written by human demonstrators in 56% of cases. WebGPT has outperformed GPT-3, but it still has weak spots to overcome: for example, it sometimes cites unreliable sources in its answers.

Conclusion

AI innovation is developing rapidly. NLP-powered solutions with natural language generation features impress technical specialists and a wider audience alike. Here's what we know about the niche at the moment:

- NLG models excel at turning big data into natural, human-readable insights. This task is backbreaking for human employees, as they can't process information of this volume;

- The NLG market is expected to grow at an 18.8% CAGR between 2020 and 2027;

- OpenAI, AX Semantics, Google, and Yseop are among the niche market leaders;

- The most popular NLG use cases are chatbots, automated personalization, content creation, reporting, and inventory monitoring;

- Natural language generation algorithms can be applied in various industries: retail, finance, insurance, media, manufacturing, and many others;

- The three main challenges of content creation with NLG are bias, unstructured data, and the quality of the produced content;

- GPT-3 is one of the most powerful NLG solutions, built with 175 billion parameters, and WebGPT is its fine-tuned version that uses a text-based web browser to generate long-form answers.

About the author

Polina Sukhostavets

Content Writer

Polina is a curious writer who strongly believes in the power of quality content. She loves telling stories about trending innovations and making them understandable for the reader. Her favorite subjects include AI, AR, VR, IoT, design, and management.