AI voice identification has come a long way from a futuristic idea to something we use daily. In fact, the speech and voice recognition market is expected to hit USD 84.97 billion by 2032, up from USD 12.62 billion in 2023.

That’s why voice application development is becoming a must for businesses that want to stay competitive. If you plan to build audio recognition software, focusing on accuracy, user experience, and seamless integration with existing systems is important.

Now, let’s dive into how to develop a voice-enabled app that will work for your business.

Understanding Voice Recognition Technology

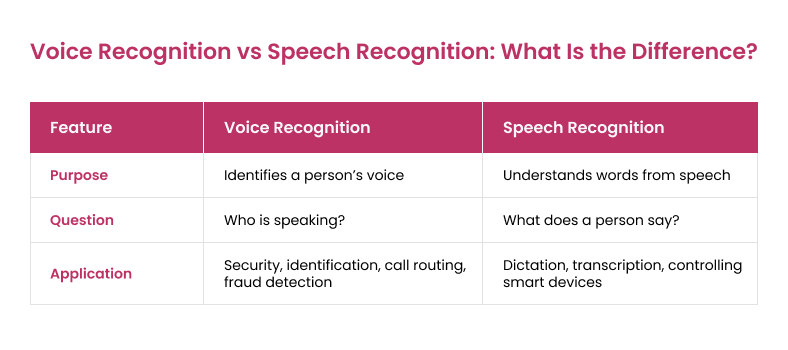

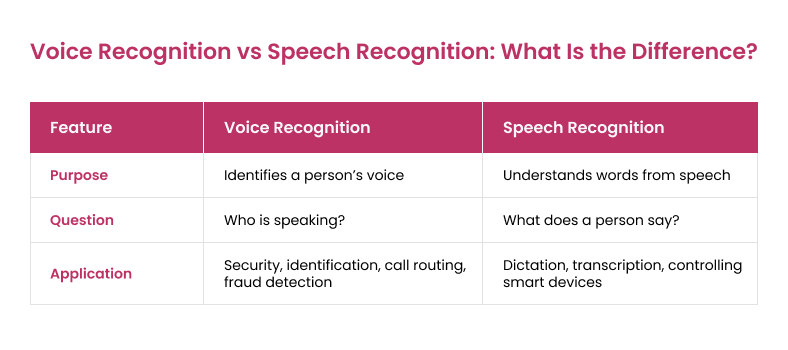

The first thing you need to know about voice recognition is that it’s not speech recognition. Despite seemingly interchangeable terms, these artificial intelligence (AI) technologies differ in use and internal mechanics. Let’s try to clear up the confusion.

- Speech recognition algorithms transform audio input into text. The main focus of speech recognition is to detect and understand any speech, making it a crucial component of custom chatbot development.

- Voice recognition analyzes audio input to find patterns and match those patterns with database knowledge to identify who speaks and how they sound.

Voice and speech recognition serve completely different purposes and are not interchangeable.

This crucial difference influences how the voice identification technology operates. It’s one thing to detect and understand speech – and completely another to detect who is speaking. To do that, voice recognition systems go through the next steps:

- Voice capture: the user speaks into the microphone;

- Feature extraction: the system analyzes the audio for characteristics such as tone, pitch, and speech speed;

- Feature matching: the system compares audio features to the ones stored in its database;

- Decision-making: the system calculates similarities between input and stored knowledge;

- Post-processing: based on the results, the system makes a decision about the speaker’s identity.
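The matching and decision steps above can be sketched in a few lines. This is a minimal illustration, not a production method: it assumes feature extraction has already produced a small numeric vector per recording, and the names `cosine_similarity`, `identify_speaker`, the sample vectors, and the 0.9 threshold are all hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def identify_speaker(features, enrolled, threshold=0.9):
    """Return (speaker, score) for the best match, or (None, score) if it is too weak."""
    best_name, best_score = None, -1.0
    for name, reference in enrolled.items():
        score = cosine_similarity(features, reference)  # feature matching
        if score > best_score:
            best_name, best_score = name, score
    if best_score < threshold:                          # decision
        return None, best_score
    return best_name, best_score

# Hypothetical stored voiceprints (e.g. statistics of pitch, tone, speech speed).
enrolled = {"alice": [0.9, 0.1, 0.4], "bob": [0.2, 0.8, 0.5]}
speaker, score = identify_speaker([0.88, 0.12, 0.41], enrolled)
```

Real systems typically use learned embeddings with hundreds of dimensions, but the compare-then-threshold structure stays the same.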

Now that we know the difference between automatic speech recognition and voice recognition, let’s cover what’s happening in the industry’s market.

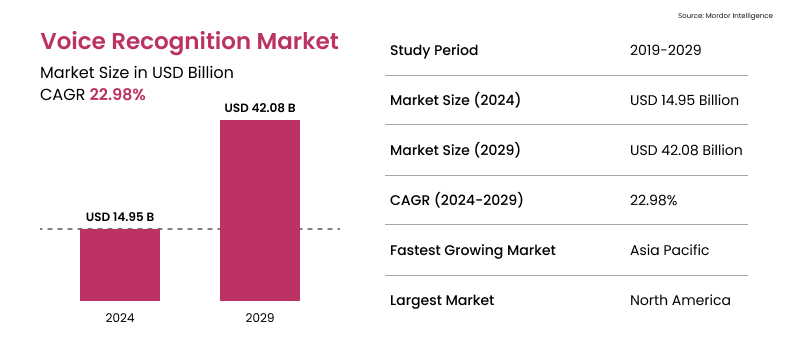

Trends in the Voice Recognition Market

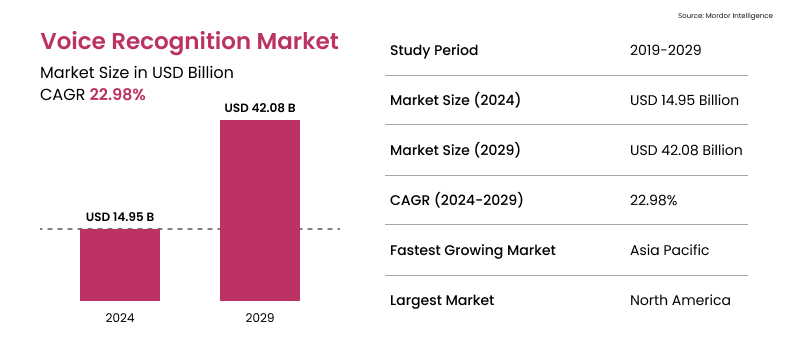

According to market estimates, the voice recognition market is worth USD 14.95 billion as of 2024 and is projected to reach USD 42.08 billion by 2029. This growth is driven by increasing demand for and use of the technology across industries.

The voice recognition market is likely to grow almost threefold in five years.
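To put the 2024 and 2029 figures in perspective, here is a quick calculation of the overall growth multiple and the annual growth rate they imply. The helper name `implied_cagr` is ours, and the result is simple arithmetic on the two numbers quoted above, not an independent forecast.

```python
def implied_cagr(start_value, end_value, years):
    """Compound annual growth rate implied by a start value, end value, and period."""
    return (end_value / start_value) ** (1 / years) - 1

growth_multiple = 42.08 / 14.95                 # USD billions, 2029 vs 2024
cagr = implied_cagr(14.95, 42.08, 2029 - 2024)
print(f"{growth_multiple:.2f}x overall, {cagr:.1%} per year")
```

The multiple comes out at a little over 2.8, with an implied annual growth rate of roughly 23%.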

Voice verification software is primarily used in the retail, banking, and healthcare industries for either security or accessibility. At the same time, voice recognition is gaining traction in the linguistic industry. But what are some real-world examples of this technology?

Voice Biometrics

Everyone has seen it in movies: a person gets into a room or accesses valuable data when a computer hears a specific voice command. Well, it’s not just out of fiction anymore!

Using AI voice authentication as a security measure can protect users from identity theft and data breaches. It’s based on the core principle of how voice recognition works: analyzing audio input and matching it with audio data in the database. Nowadays, this method is widely used by the banking and healthcare sectors.

Transcription Software

For a long time, the biggest problem plaguing transcription software was difficulty distinguishing speakers when there is more than one voice in the audio recording. Marking who says what usually had to be done manually.

Voice identification software development paired up with AI speech recognition solves this problem. When transcribing a dialogue between people, today’s artificial intelligence (AI) software can now differentiate between speakers and highlight in text who says what.
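As a rough sketch of how the two technologies combine: speech recognition yields words with timestamps, voice identification yields speaker turns with timestamps, and the two are merged by time. The input shapes and the function names `label_words` and `to_dialogue` are assumptions made for illustration, not the output format of any particular product.

```python
def label_words(words, speaker_turns):
    """Attach a speaker label to each word using the midpoint of its time span."""
    labeled = []
    for text, start, end in words:
        midpoint = (start + end) / 2
        speaker = "unknown"
        for name, turn_start, turn_end in speaker_turns:
            if turn_start <= midpoint < turn_end:
                speaker = name
                break
        labeled.append((speaker, text))
    return labeled

def to_dialogue(labeled):
    """Collapse consecutive words from the same speaker into one line."""
    lines = []
    for speaker, text in labeled:
        if lines and lines[-1][0] == speaker:
            lines[-1][1].append(text)
        else:
            lines.append((speaker, [text]))
    return [f"{speaker}: {' '.join(tokens)}" for speaker, tokens in lines]

# Hypothetical outputs of a speech recognizer and a speaker identifier.
words = [("Hello", 0.0, 0.4), ("there", 0.4, 0.8), ("Hi", 1.2, 1.5), ("Anna", 1.5, 1.9)]
turns = [("Speaker 1", 0.0, 1.0), ("Speaker 2", 1.0, 2.0)]
dialogue = to_dialogue(label_words(words, turns))
```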

If you’re interested in developing your own transcription software, we have great news for you! CHI Software is an experienced artificial intelligence development company, and we will happily help you with the development!

Mobile Payments

Not so long ago, cash was the dominant payment method. Today, customers don’t even need to carry a physical credit card: they can simply pay with their smartphone. And now we’re standing before a new payment method – voice payments.

Thanks to voice recognition technology, soon users will simply need to say the password for identity confirmation. This method already shows a lot of voice app development potential to make online purchases faster and safer.

Forensics and Criminal Identification

While this method isn’t used by the retail or banking sectors, it’s quite helpful for the criminal justice system. The thing about voice is that it’s almost like fingerprints – it’s quite rare to find two people who are a precise match.

Combining core mechanics of voice recognition with machine learning artificial intelligence (AI) could help out here, too. A voice recognition module comparing audio inputs of a suspect with audio data of criminals can make the life of law enforcement that much easier.

To sum up, voice recognition technology is gaining momentum, with no signs of stopping any time soon. But how do you develop voice recognition for your business solution? Here, we compiled a rough outline of what your voice-activated app development process should look like.

8 Steps of the Voice Recognition App Development Process

These development steps will allow you to better understand what your team is going to do and what results you may expect after each stage.

There are eight steps you need to take to create apps that use voice recognition. Let’s start with the first one:

Step 1: Define Objectives and Use Cases

The first thing you need to decide is the type of software you want to develop. There are two main types of voice-enabled apps:

- Speaker-dependent can recognize the voice of one user. To train it, you need to provide software with the voice signals from the user to be used as a reference database;

- Speaker-independent can recognize the voices of multiple users. Such systems don’t require per-user training since they can handle different accents and pitches thanks to artificial intelligence (AI).

Both types serve different purposes. For example, speaker-dependent apps are widely used in security, while speaker-independent ones are used as voice assistants and chatbots.
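For the speaker-dependent case, the "reference database" can be as simple as one averaged voiceprint per user. The sketch below assumes each recording has already been reduced to a short feature vector; `enroll`, `verify`, and the `max_distance` value are hypothetical names and settings chosen for illustration.

```python
import math

def enroll(samples):
    """Average several feature vectors from one user into a reference voiceprint."""
    count = len(samples)
    return [sum(values) / count for values in zip(*samples)]

def verify(voiceprint, features, max_distance=0.2):
    """Accept the identity claim if the new features are close to the voiceprint."""
    return math.dist(voiceprint, features) <= max_distance

# Three hypothetical enrollment recordings from the same user.
samples = [[0.90, 0.10, 0.40], [0.86, 0.14, 0.42], [0.94, 0.06, 0.38]]
voiceprint = enroll(samples)

same_user = verify(voiceprint, [0.88, 0.12, 0.41])
other_user = verify(voiceprint, [0.20, 0.80, 0.50])
```

Averaging several samples makes the reference less sensitive to a single noisy recording, which is one reason enrollment usually asks the user to repeat a phrase a few times.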

Step 2: Research the Market and Choose APIs

Do your research and look into what voice-enabled apps already exist on the market and what they do. Additionally, you will need to decide what application programming interface (API) to use. Your choice will influence your voice application development project and features you aim to implement. Here are some of the most popular ones:

- Microsoft Azure Speech provides great voice and speech recognition features. This API is highly customizable to meet your business needs;

- Amazon Transcribe is an AWS service that can identify speakers and generate subtitles for video content based on speech recognition.
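To give a feel for what working with such an API looks like, here is a hedged sketch of requesting speaker labels from Amazon Transcribe through the `boto3` SDK. The job name and S3 location are hypothetical placeholders, and `start_job` is not executed here because it needs `boto3` installed and valid AWS credentials.

```python
def build_transcription_request(job_name, media_uri, max_speakers=2):
    """Keyword arguments for Amazon Transcribe's StartTranscriptionJob operation."""
    return {
        "TranscriptionJobName": job_name,
        "Media": {"MediaFileUri": media_uri},
        "MediaFormat": "wav",
        "LanguageCode": "en-US",
        "Settings": {
            "ShowSpeakerLabels": True,        # ask the service to mark who is speaking
            "MaxSpeakerLabels": max_speakers,  # upper bound on distinct speakers
        },
    }

def start_job(request):
    """Submit the job. Requires boto3 and AWS credentials, so it is not called below."""
    import boto3
    client = boto3.client("transcribe")
    return client.start_transcription_job(**request)

request = build_transcription_request(
    "demo-call-001",                        # hypothetical job name
    "s3://example-bucket/calls/demo.wav",   # hypothetical S3 location
)
```

Keeping the request in a plain dictionary makes it easy to review and unit test before any paid API call is made.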

Step 3: Decide on App Architecture

Your app architecture depends on the problems the app aims to solve. For example, in mobile app development, you will use a different toolset from web-based applications.

The two main things you need to choose are programming languages and libraries for development. Let’s start with languages:

- Python is the most widely used for artificial intelligence (AI) development, including voice and speech recognition. It’s also one of the easiest languages to work with and is usually chosen because it supports most APIs and libraries. Choosing Python will allow you to easily integrate machine learning into any of your solutions;

- C++ is a good alternative if your main focus is high performance. Compared to other languages, it is considered to be the most efficient. Developers might choose it over other languages due to its frameworks and the ability to integrate with other languages;

- Java is a common choice if you’re looking for mobile voice and speech recognition software, especially on Android. It has a wide array of APIs and frameworks catered specifically for mobile app development.

If you’re interested in mobile app development, we have just the right team for you! We at CHI Software provide mobile development services that will turn your ideas into reality.

- JavaScript is one of the harder languages to work with – yet could be the best choice if you’re interested in web-based voice recognition software. It can integrate with almost every web API to provide users with voice recognition features.

Another tool you need to choose is libraries. Here are some of the most widely used:

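- CMU Sphinx is a family of open-source speech recognition toolkits. Sphinx4 is written in Java, while PocketSphinx is a lightweight C library suited to mobile and embedded use, so it can be integrated with other languages as well;

- PyTorch is a general-purpose deep learning framework with a Python API. Together with its companion audio library, torchaudio, it can be used to build and train speech-to-text and voice recognition models;

- HTK (the Hidden Markov Model Toolkit) was originally developed at the Cambridge University Engineering Department, and Microsoft holds the copyright to the original code. It’s mainly used for speech analysis and transforming speech into text.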


Step 4: Design User Interface

Just like any other app development, voice recognition solutions need to have a compelling user interface (UI). Here are some tips to consider:

- Understand your core audience and your competitors’ designs;

- More does not mean better: simplicity is the key to a good UI;

- The color scheme should be consistent throughout the app;

- App navigation should be easy to understand;

- Think about adding alternative visuals to your app for colorblind users.

The UI creation process involves a lot of iterations and experimentation. Remember that the interface should be both functional and pleasant at the same time.

Step 5: Start the Development Process

This is where the magic happens. After APIs and libraries have already been chosen, it’s time to focus on AI training. Here are key points to focus on.

Data collection: While some businesses have been collecting data for years, you might lack sufficient amounts. There are two ways to fix it: public datasets (supplemented by web scraping) and surveys.

- Public datasets can be found through resources like Google Dataset Search or GitHub, which host datasets for different purposes. Where no suitable dataset exists, web scraping can fill the gap.

- Surveys are conducted among your target audience to gather as much information as possible.

Data cleaning: In many cases, collected data will have different formats and will need some restructuring. For AI development, data is unusable in raw form, so you will need to clean it. The data cleaning process focuses on formatting, cleaning duplicates and dealing with missing or corrupted data. Data cleaning is sometimes done automatically, but it is advised to check it manually after.
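A minimal sketch of an automated cleaning pass might look like this. It assumes each record is a dictionary with a `file_name` and raw `audio` bytes; the field names and the `clean_records` helper are hypothetical, and exact-duplicate detection by hash is only one simple form of deduplication.

```python
import hashlib

def clean_records(records, min_bytes=1):
    """Drop records with missing fields, empty audio, or duplicate audio content."""
    seen_hashes = set()
    cleaned = []
    for record in records:
        audio = record.get("audio")
        file_name = (record.get("file_name") or "").strip().lower()  # unify formatting
        if not file_name or not audio or len(audio) < min_bytes:
            continue                       # missing or corrupted entry
        digest = hashlib.sha256(audio).hexdigest()
        if digest in seen_hashes:
            continue                       # exact duplicate of an earlier clip
        seen_hashes.add(digest)
        cleaned.append({"file_name": file_name, "audio": audio})
    return cleaned

raw = [
    {"file_name": "Clip_001.WAV ", "audio": b"\x01\x02\x03"},
    {"file_name": "clip_001_copy.wav", "audio": b"\x01\x02\x03"},  # duplicate audio
    {"file_name": "", "audio": b"\x04\x05"},                       # missing name
    {"file_name": "clip_002.wav", "audio": b""},                   # empty audio
    {"file_name": "clip_003.wav", "audio": b"\x06\x07"},
]
cleaned = clean_records(raw)
```

Logging what was dropped, rather than silently discarding it, makes the manual check afterwards much faster.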

Data labeling: Clean data is labeled depending on the file’s contents and structured into a dataset, from which an AI model can be trained. Datasets are organized in terms of partitions and segments. Each partition is considered to be one processing node. Each segment contains files from many partitions, and partitions can have many files from different segments.
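Here is one simple way labeled data could be organized for training. It is a sketch under our own assumptions and does not reproduce the partition and segment scheme described above: each clip gets a speaker label, and all clips from one speaker land in the same split so the model is validated on voices it has not seen. The names `assign_split` and `build_manifest` are hypothetical.

```python
import hashlib

def assign_split(speaker_id, validation_percent=20):
    """Deterministically place every clip of a speaker into the same split."""
    digest = hashlib.sha256(speaker_id.encode("utf-8")).digest()
    bucket = digest[0] * 100 // 256        # stable bucket in the range 0..99
    return "validation" if bucket < validation_percent else "train"

def build_manifest(clips):
    """Turn (file_name, speaker_id) pairs into labeled dataset entries."""
    return [
        {"file": file_name, "label": speaker_id, "split": assign_split(speaker_id)}
        for file_name, speaker_id in clips
    ]

manifest = build_manifest([
    ("alice_001.wav", "alice"),
    ("alice_002.wav", "alice"),
    ("bob_001.wav", "bob"),
])
```

Hashing the speaker ID instead of shuffling randomly keeps the split reproducible when new clips are added later.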

After the data is structured into datasets, you can start training an AI model. The training steps are the same for mobile and web apps since the AI model doesn’t depend on where it’s used. In parallel with AI training, you should start developing the app itself. Once the UI is implemented, your voice-enabled product will start to take shape.

Step 6: Test Your Software

After initial development is done, it’s time to test your solution. You should focus on fine-tuning your solution to make it work properly. Stability and UI are the other two points of interest at this stage. Here are some tips on how to achieve that:

- To test your app to the fullest extent, combine different types of testing;

- Since automated testing works on scripts, focus manual testing on unscripted and random scenarios;

- Some features are especially sensitive to code changes, so they should be your primary focus;

- Some testing scenarios are tedious to test manually, so combine automation with artificial intelligence (AI) to save time.
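When fine-tuning a voice recognition system, it helps to measure accuracy with numbers rather than impressions. Two metrics commonly used for biometric systems are the false acceptance rate (impostors let in) and the false rejection rate (genuine users turned away). The source does not name specific metrics, so treat this choice, the `error_rates` helper, and the sample scores as assumptions.

```python
def error_rates(trials, threshold):
    """Compute (false_accept_rate, false_reject_rate) for a decision threshold.

    trials is a list of (similarity_score, is_genuine_speaker) pairs.
    """
    genuine = [score for score, is_genuine in trials if is_genuine]
    impostor = [score for score, is_genuine in trials if not is_genuine]
    false_rejects = sum(1 for score in genuine if score < threshold)
    false_accepts = sum(1 for score in impostor if score >= threshold)
    frr = false_rejects / len(genuine) if genuine else 0.0
    far = false_accepts / len(impostor) if impostor else 0.0
    return far, frr

# Hypothetical test results: four genuine attempts and four impostor attempts.
trials = [(0.95, True), (0.91, True), (0.72, True), (0.88, True),
          (0.40, False), (0.81, False), (0.55, False), (0.30, False)]
far, frr = error_rates(trials, threshold=0.8)
strict_far, strict_frr = error_rates(trials, threshold=0.9)
```

Raising the threshold lowers false acceptances but raises false rejections, so the right setting depends on whether security or convenience matters more for your use case.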

Step 7: Deploy the Product

After bug fixing, it’s time to choose your deployment strategy and get ready to launch your product. There are a couple of options for how to do it:

- Blue-Green deployment allows developers to have two versions of your app at the same time. One is the current version (blue) and the other is the updated version (green). This allows for better version control and testing in a close-to-live environment;

- Canary deployment lets developers roll out an update to a small share of users first instead of doing the full release at once. This method enables better control over the software’s performance and user feedback before everyone receives the update;

- Rolling deployment focuses on gradually replacing the old version with the new one. This reduces risks and allows for deployment without downtime.
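As an illustration of gradual rollout, a canary release is often implemented by sending a small, stable share of users to the new version. The sketch below is one possible approach and is normally handled by a load balancer or feature-flag service rather than application code; `rollout_bucket` and `choose_version` are hypothetical names.

```python
import hashlib

def rollout_bucket(user_id):
    """Map a user ID to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100

def choose_version(user_id, canary_percent):
    """Send roughly canary_percent of users to the new version."""
    return "canary" if rollout_bucket(user_id) < canary_percent else "stable"

# Start with 5% of users on the new version, then raise the share step by step.
version = choose_version("user-42", canary_percent=5)
```

Because the bucket is derived from the user ID, each user keeps seeing the same version for as long as the percentage stays unchanged.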

After the software goes live, you might think that your job is done. In reality, there is still one more step in the app development process.

Step 8: Maintenance and Updates

To ensure a long life expectancy for your solution, you will need to constantly update your software. Here are some things you should focus on:

- Regularly maintain the app to ensure its longevity;

- Use version control systems for update management;

- Update the software’s security to cover all potential vulnerabilities;

- Fix bugs that weren’t noticed in the testing phase and those that were reported by users;

- Optimize the app’s performance, since it influences user experience and leads to better user satisfaction;

- Support users with online consultations and educational materials so they can better understand the app;

- Collect user feedback to adapt to the changing needs.

The last step of app development is iterative, and can continue as long as the software is considered profitable to maintain.

This sums up the rough outline of the voice recognition app development process. However, you might encounter challenges on your way. Let’s talk about them.

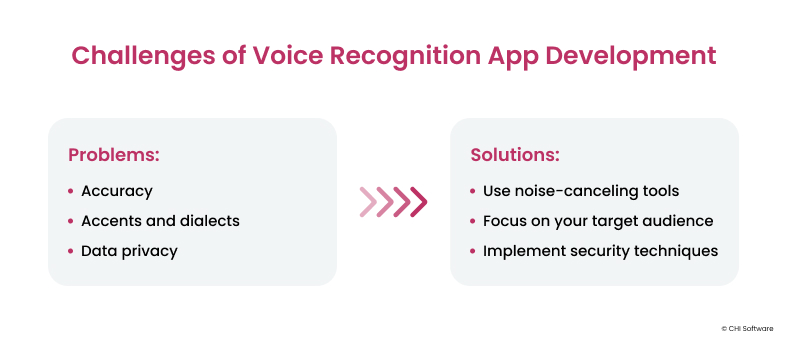

Challenges in Voice Recognition App Development

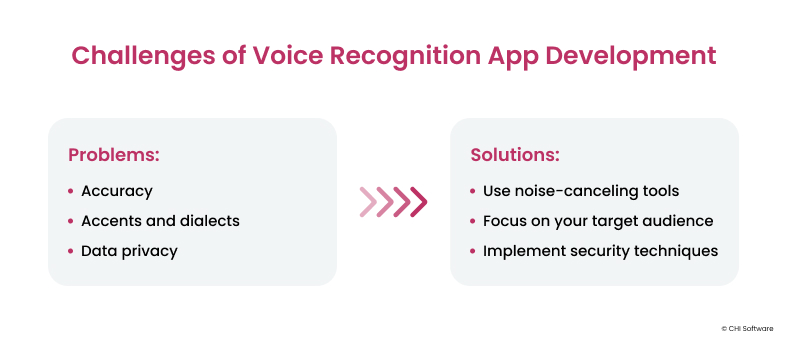

Software development is always a long and hard road. Depending on the type of software you are building, the challenges that you might face will vary. Let’s cover what awaits you in voice recognition app development and how to overcome obstacles.

Just like any type of development, voice recognition engineering has its challenges. But they are manageable if you have a reliable technical partner by your side.

Accuracy

Problem: The efficiency of your voice recognition system will define its usefulness. High accuracy requires clean sound input, which is challenging to capture due to background noise. This problem has two sides: input audio quality and quality of audio devices.

Solution: Implement noise-canceling tools that will “clean” the audio files so that the voice recognition system can properly detect who is speaking.
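To show the basic idea behind audio "cleaning", here is a deliberately simple energy gate that drops frames containing only quiet background hiss. This is a stand-in for illustration, not real noise cancellation: production systems use techniques such as spectral filtering or trained denoising models. The frame size, threshold, and function names are assumptions.

```python
def frame_energy(frame):
    """Mean squared amplitude of one frame of samples."""
    return sum(sample * sample for sample in frame) / len(frame)

def drop_quiet_frames(samples, frame_size=4, energy_threshold=0.01):
    """Keep only the frames whose energy exceeds the threshold."""
    kept = []
    for start in range(0, len(samples), frame_size):
        frame = samples[start:start + frame_size]
        if frame_energy(frame) > energy_threshold:
            kept.extend(frame)
    return kept

signal = [0.01, -0.02, 0.01, 0.00,    # background hiss
          0.50, -0.60, 0.55, -0.45,   # speech
          0.02, -0.01, 0.00, 0.01]    # background hiss
speech_only = drop_quiet_frames(signal)
```

A gate like this removes silence between words but cannot separate a voice from noise that overlaps it, which is why dedicated noise suppression is still needed.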

Accents and Dialects

Problem: There are numerous natural language processing (NLP) tools that can recognize voices. Still, the majority of language models are trained on data from American English speakers. This can create a problem when you try to develop a voice recognition system targeted at speakers of another language. Additionally, the user might speak with an accent or in a local dialect.

Solution: Since you can’t include every spoken language and its dialects and accents in your database due to the limited database size, you need to try another approach. Aim at your target audience and their dialects and accents. The more training you provide the language model with, the better voice recognition will work.


Data Privacy

Problem: Some users have concerns about artificial intelligence (AI) technology due to the sensitivity of biometric data. Improper database security might compromise users instead of protecting them.

Solution: Implement robust security mechanisms to make your software as safe as possible, including access controls and encryption, and creating a network security system via firewalls. Due to the nature of the data you collect, you will need to comply with regulations and laws that are in place in your country.

5 Tips from CHI Software Engineers: How to Build Trust in Voice Recognition Software

As voice recognition is widely used to protect sensitive information and private data, you should think two steps ahead during voice authentication system development. CHI Software AI/ML experts advise the following:

These five steps can make your voice recognition system much more secure. Consider discussing these techniques before your development project starts.

1. Use a System with Numerous Identifiers

Relying solely on voice characteristics is insufficient for a reliable voice recognition system. Our team recommends building software that analyzes multiple aspects of a voice, such as pitch, tone, and rhythm, along with behavioral and physiological traits. Voice aspects blended with more identifiers like device details or location can give your system a more substantial security boost. Think of it as building layers of protection – the more, the better.

2. Use Multi-Factor Authentication

Voice authentication alone might not always be enough for reliable security. Consider adding more factors, like a one-time password (OTP) or facial recognition, that can make your system much harder to bypass. You may object that it’s more effort for users, but securing their sensitive data is an investment in your reputation and customer loyalty. Just remember to keep it smooth on the user’s side – no one likes jumping through too many hoops.
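In code, the idea boils down to requiring every factor to pass. The sketch below assumes a voice similarity score and a one-time password are already available from other parts of the system; `authenticate` and the 0.85 threshold are hypothetical.

```python
import hmac

def authenticate(voice_score, submitted_otp, expected_otp, voice_threshold=0.85):
    """Grant access only when both the voice match and the one-time password pass."""
    voice_ok = voice_score >= voice_threshold
    # compare_digest avoids leaking information through comparison timing.
    otp_ok = hmac.compare_digest(submitted_otp.encode(), expected_otp.encode())
    return voice_ok and otp_ok

granted = authenticate(0.93, "482915", "482915")
wrong_code = authenticate(0.93, "000000", "482915")
wrong_voice = authenticate(0.40, "482915", "482915")
```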

3. Restrict the Number of Attempts

A hacker’s favorite playground is unlimited tries. By setting a limit on the number of voice authentication attempts, you can quickly shut down brute force attacks. Add a time delay after failed attempts, and you get a roadblock for intruders. Remember that security isn’t just about keeping bad actors out but also about frustrating them enough to give up.
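A minimal sketch of an attempt limiter with a time delay could look like the following. The class name, the defaults of three attempts and a five-minute lockout, and the in-memory storage are all assumptions; a real service would persist this state and tune the limits to its risk profile.

```python
import time

class AttemptLimiter:
    """Lock a user out for a while after too many failed voice checks."""

    def __init__(self, max_attempts=3, lockout_seconds=300, clock=time.monotonic):
        self.max_attempts = max_attempts
        self.lockout_seconds = lockout_seconds
        self.clock = clock
        self.failures = {}       # user_id -> consecutive failed attempts
        self.locked_until = {}   # user_id -> time when the lock expires

    def is_locked(self, user_id):
        return self.clock() < self.locked_until.get(user_id, float("-inf"))

    def record(self, user_id, success):
        """Register one attempt's outcome; return True if the user is now locked."""
        if success:
            self.failures.pop(user_id, None)
            return False
        self.failures[user_id] = self.failures.get(user_id, 0) + 1
        if self.failures[user_id] >= self.max_attempts:
            self.locked_until[user_id] = self.clock() + self.lockout_seconds
            self.failures[user_id] = 0
            return True
        return False
```

Call `is_locked` before running voice authentication and `record` after it. The `clock` parameter exists so the lockout can be tested without waiting.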

4. Use Continuous Authentication

Continuous authentication ensures that the system monitors the user throughout the session. For example, if someone authenticates successfully but then starts speaking in a different tone or voice, the system can flag it. This extra layer of vigilance can be a lifesaver, especially in high-security environments.
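One simple way to picture continuous authentication is a rolling average of recent voice-match scores that raises a flag when it drops. This is a sketch under our own assumptions: the `SessionMonitor` class, the window of three scores, and the 0.8 minimum are illustrative, not recommended settings.

```python
from collections import deque

class SessionMonitor:
    """Track recent voice-match scores and flag the session when they drop."""

    def __init__(self, window=3, min_average=0.8):
        self.scores = deque(maxlen=window)
        self.min_average = min_average

    def update(self, score):
        """Add the latest score; return True if the session should be flagged."""
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return False   # not enough evidence yet
        return sum(self.scores) / len(self.scores) < self.min_average

monitor = SessionMonitor()
# The speaker changes partway through this hypothetical session.
flags = [monitor.update(score) for score in [0.95, 0.92, 0.90, 0.40, 0.35]]
```

Averaging over a window keeps one noisy utterance from ending a legitimate session, at the cost of reacting a little later to a real change of speaker.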

5. Enable Liveness Detection

Spoofing attacks are a real threat – hackers can use voice recordings or synthetic voice clones to trick your system. Liveness detection can help you differentiate a live human voice from recordings or deepfake attempts. Detecting background noise, testing for conversational responses, or analyzing lip movements (if combined with video) strengthens this line of defense.

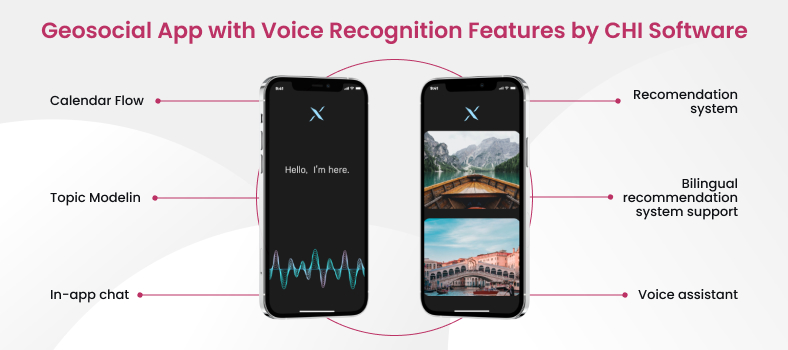

CHI Software Expertise: Voice Verification in Geosocial Networking

Voice assistants help us quickly provide personalized experiences to our customers when they use gadgets to shop, call their loved ones, or listen to music. But first, a device must identify who is speaking.

That is why the CHI Software AI/ML team made a voice recognition and transcription feature an organic part of our new project.

Our client wanted to make a new AI-powered app where people can share and find recommendations for different venues nearby, much like Yelp or Foursquare, but with a voice assistant. People often look for places while driving or on the move. So, the voice assistant was more than a feature: it was a necessity.

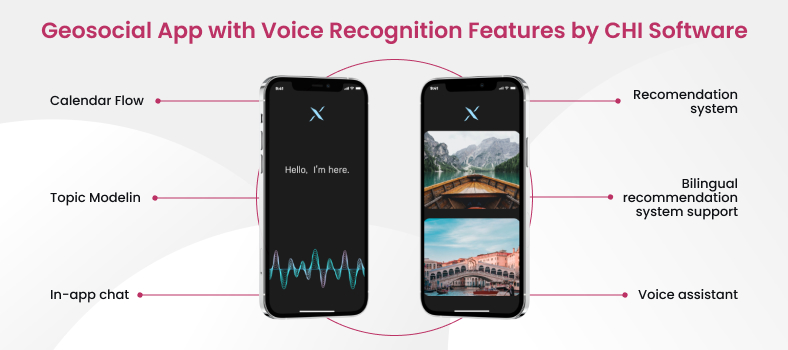

Our team developed a multifaceted solution. We included all the key features to simplify searching for places, events, or new friends:

- A dynamic calendar suggests nearby events based on the user’s current time and location;

- Topic channels tailored to a person’s interests;

- An in-app chat for easy socializing;

- A personal recommendations system suggesting venues and events;

- A voice assistant recognizes users’ voices, provides easy voice navigation, and translates street names and signs for better convenience.

Voice recognition adds both security and interaction to the mobile user experience.

This blend of features resonated well with the audience and successfully boosted users’ engagement. As a result, the app has created a vibrant online hub for socializing enthusiasts.

You can explore more about the solution and its workflow in our detailed case study.

Conclusion

Voice recognition is already implemented in multiple industries, and the demand for new solutions is constantly on the rise.

The guide we’ve provided will help you in your development journey, yet this process might be too difficult for developers without proper expertise in artificial intelligence (AI). Luckily, you have found us! We at CHI Software will gladly guide you through the development and help you create the product just the way you envision it.

Contact us today, and we will make sure you receive quality service that is worth your money!

About the author

Alex is a Data Scientist & ML Engineer with an NLP specialization. He is passionate about AI-related technologies, fond of science, and participated in many international scientific conferences.
